During the penultimate week of June 2017, temperatures of thirty degrees Celsius or more were recorded across the UK for five days straight. It was the hottest continuous spell of weather in the country since the 70s. And while this may sound like a minor headline, it’s evidence of an important fact: the world is getting warmer.

According to the Met Office, a ‘very hot’ summer is now likely to occur every five years rather than every 50. By the 2040s, more extreme heatwaves could become commonplace, and this could have serious consequences.

The extreme heatwave that hit Europe in 2003 led to a death toll in the tens of thousands and placed severe strain on the continent – not only on its people, but on its infrastructure, too. If such weather becomes more frequent, what does it mean for our electricity network?

Electricity in extreme weather

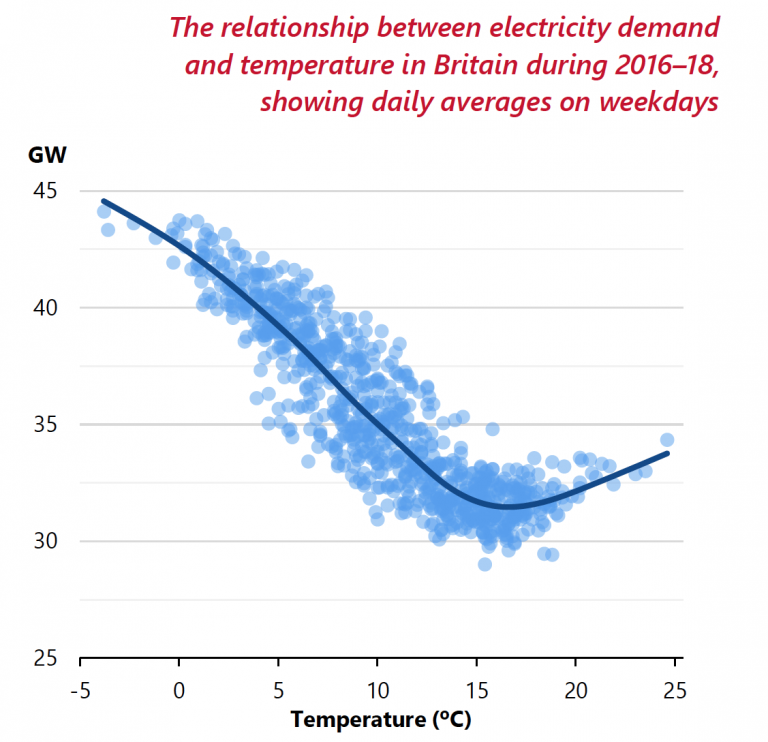

In hot countries, electricity use soars during extreme heat due to increased use of cooling devices such as air conditioning. One US study predicted that an extreme temperature upswing could drive as much as a 7.2% increase in US peak demand.

In Northern Europe, including the UK, where air conditioning is less prevalent, the effects of heat aren’t as pronounced, but that could change. In France, hot weather is estimated to have contributed to a 2 GW increase in demand in June 2017.

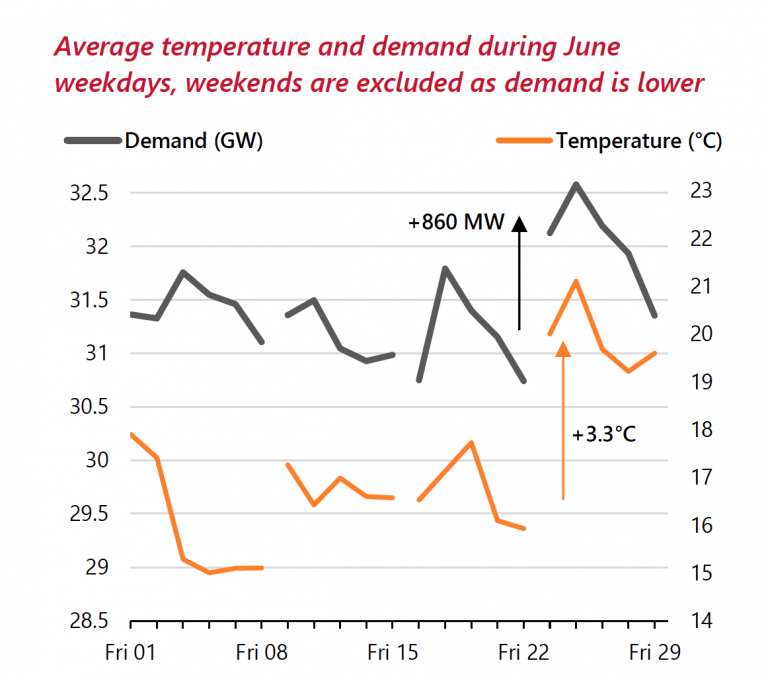

The UK, which has traditionally only seen demand swings due to cold weather, is also beginning to feel the effects of extreme heat. According to Dr Iain Staffell of Imperial College London, for every one-degree rise in temperature during June 2017, electricity demand rose by 0.9% (300 MW). For example, on 19th June, when temperatures averaged 21.9 degrees Celsius, demand reached 32 GW. On the 25th, when temperatures dropped to an average of 15.9 degrees Celsius, demand shrank to 26.6 GW.
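As a rough check on these figures, the sketch below treats the quoted sensitivity of about 300 MW per degree as a simple linear model and compares it with the two days cited. The linear model and the function name `temperature_driven_change` are illustrative assumptions, not Dr Staffell's method.

```python
# Sensitivity quoted for June 2017: demand rose ~0.9% (about 300 MW) per 1 °C.
# Assumption: demand responds linearly to temperature.
SENSITIVITY_GW_PER_DEG_C = 0.3


def temperature_driven_change(t_from_c: float, t_to_c: float,
                              sensitivity: float = SENSITIVITY_GW_PER_DEG_C) -> float:
    """Demand change in GW attributed to temperature alone (hypothetical linear model)."""
    return sensitivity * (t_to_c - t_from_c)


# Daily averages quoted in the article.
demand_19th_gw, temp_19th_c = 32.0, 21.9
demand_25th_gw, temp_25th_c = 26.6, 15.9

observed_drop_gw = demand_19th_gw - demand_25th_gw
modelled_drop_gw = -temperature_driven_change(temp_19th_c, temp_25th_c)
other_factors_gw = observed_drop_gw - modelled_drop_gw

print(f"Observed drop:            {observed_drop_gw:.1f} GW")
print(f"Explained by temperature: {modelled_drop_gw:.1f} GW")
print(f"Left for other factors:   {other_factors_gw:.1f} GW")
```

On this reading, temperature accounts for roughly 1.8 GW of the 5.4 GW difference. The remainder likely reflects other influences on demand; for instance, 25 June 2017 was a Sunday, and demand is typically lower at weekends than on a weekday such as Monday 19 June.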

On the very hottest days of summer, this can mean the grid needs to deliver an additional 1.5 GW of power – equivalent to the output of five rapid-response gas power stations or two-and-a-half biomass units at Drax Power Station.
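The comparison can be unpacked with some simple arithmetic. The sketch below derives the unit sizes implied by the article's own ratios and, using the 300 MW per degree sensitivity quoted for June 2017, how much warmer a day would need to be to add 1.5 GW. The implied sizes come only from these ratios; they are not official plant ratings, and the linear response is an assumption.

```python
EXTRA_DEMAND_MW = 1500.0          # additional power needed on the hottest days
GAS_STATIONS = 5                  # rapid-response gas power stations in the comparison
BIOMASS_UNITS = 2.5               # Drax biomass units in the comparison
SENSITIVITY_MW_PER_DEG_C = 300.0  # June 2017 figure: ~300 MW per 1 °C

# Size of each plant implied by the comparison (not official ratings).
implied_gas_station_mw = EXTRA_DEMAND_MW / GAS_STATIONS
implied_biomass_unit_mw = EXTRA_DEMAND_MW / BIOMASS_UNITS

# Temperature rise that would produce the extra demand, assuming a linear response.
implied_warming_c = EXTRA_DEMAND_MW / SENSITIVITY_MW_PER_DEG_C

print(f"Each gas station:          ~{implied_gas_station_mw:.0f} MW")
print(f"Each biomass unit:         ~{implied_biomass_unit_mw:.0f} MW")
print(f"Warming needed for 1.5 GW: ~{implied_warming_c:.0f} °C")
```

That works out at roughly 300 MW per gas station, 600 MW per biomass unit and about five degrees of warming, provided the linear relationship still holds at the hottest temperatures.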

And while heat’s effect on demand is considerable, it’s not the only way heat affects electricity.

The problem of cooling water in hot weather

Generating power doesn’t just need fuel; it’s also a water-intensive process. Power stations consume water for two main reasons: to turn into steam to drive generation turbines, and to cool down machinery.

Both rely on raising the temperature of the water. However, this water can’t simply be released back into a river or lake after use – even if nothing has been added to it – because warm water can harm the wildlife living in these habitats. It first has to be cooled, normally via cooling towers, but in hot weather this takes longer. As a result, power production becomes less efficient and, in some cases, plant output must be dialled back.

This had serious consequences for France’s nuclear power plants during the 2003 heatwave. These plants – which provide roughly 75% of the country’s electricity – draw water from nearby rivers to cool their reactors. During the heatwave, however, these rivers were both too hot and too low to safely provide water for the cooling process, forcing the power stations to either shut down or drastically reduce output.

Coupled with increased demand, this left France on the verge of a large-scale blackout. Situations like this become even more critical when you consider heat’s effects on electricity’s motorway: the national grid.

How hot weather impacts the grid

When materials get hot, they expand, and this includes the materials electricity grids are made from. For example, overhead power transmission cables are often clad in aluminium, which is particularly susceptible to expansion in heat. As it expands, overhead lines can slacken and sag. At the same time, the metal’s electrical resistance rises with its temperature, so more energy is lost as heat in the cables and efficiency drops.
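To give a sense of scale, the sketch below applies the standard linear formulas for thermal expansion and for the temperature dependence of resistance to an aluminium conductor. The coefficients are approximate textbook values for aluminium, and the 300 m span and 20 °C rise are hypothetical inputs chosen for illustration, not data from any real line.

```python
# Approximate textbook values for aluminium (assumptions, not conductor datasheet figures).
ALUMINIUM_EXPANSION_PER_C = 23e-6   # linear thermal expansion coefficient, 1/°C
ALUMINIUM_RESISTANCE_PER_C = 0.004  # temperature coefficient of resistance, 1/°C


def expanded_length_m(length_m: float, delta_t_c: float,
                      alpha: float = ALUMINIUM_EXPANSION_PER_C) -> float:
    """Conductor length after warming by delta_t_c: L = L0 * (1 + alpha * dT)."""
    return length_m * (1 + alpha * delta_t_c)


def resistance_ohm(r_ref_ohm: float, delta_t_c: float,
                   coeff: float = ALUMINIUM_RESISTANCE_PER_C) -> float:
    """Resistance after warming by delta_t_c: R = R0 * (1 + coeff * dT)."""
    return r_ref_ohm * (1 + coeff * delta_t_c)


span_m = 300.0     # hypothetical span between two pylons
warming_c = 20.0   # hypothetical rise in conductor temperature

extra_length_m = expanded_length_m(span_m, warming_c) - span_m
# Resistive (I^2 R) losses scale with R when the current is unchanged.
loss_ratio = resistance_ohm(1.0, warming_c) / resistance_ohm(1.0, 0.0)

print(f"Extra conductor length: {extra_length_m * 100:.1f} cm")
print(f"Resistive losses at the same current: {loss_ratio:.2f}x")
```

With these inputs the conductor gains about 13.8 cm of length and, at the same current, its resistive losses rise by about 8%. Because an overhead line hangs in a shallow curve, even a small gain in length produces a noticeably larger increase in sag.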

Transformers, which step voltage up and down across grids, are also susceptible. They give off heat as a by-product of their operation, so to keep them within safe limits they have what’s known as a power rating: the maximum load they can carry without exceeding a safe operating temperature.

When ambient temperatures rise, this ceiling gets lower: the load a transformer can safely carry falls by about 1% for every one degree Celsius rise in temperature. At scale, this can have a significant effect. Overall, grids can lose about 1% in efficiency for every three degrees Celsius of warming.
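The two rules of thumb in this paragraph can be written as simple linear functions. The sketch below treats the roughly 1% per degree figure as a reduction in the load a transformer can safely carry, which is how transformer derating is usually expressed, and applies the grid figure of about 1% per three degrees. The 240 MVA rating, the 20 °C reference temperature and the function names are hypothetical, chosen only to illustrate the arithmetic.

```python
TRANSFORMER_DERATE_PER_C = 0.01        # ~1% of rated load per 1 °C (article's figure)
GRID_EFFICIENCY_LOSS_PER_C = 0.01 / 3  # ~1% per 3 °C (article's figure)


def derated_capacity_mva(rated_mva: float, ambient_c: float, reference_c: float) -> float:
    """Load a transformer can safely carry, derated linearly above a reference ambient.

    Hypothetical helper: real derating depends on the transformer's design and cooling.
    """
    excess_c = max(0.0, ambient_c - reference_c)
    return rated_mva * max(0.0, 1 - TRANSFORMER_DERATE_PER_C * excess_c)


def grid_efficiency_loss(warming_c: float) -> float:
    """Fractional loss in overall grid efficiency for a given temperature rise."""
    return GRID_EFFICIENCY_LOSS_PER_C * max(0.0, warming_c)


# Hypothetical example: a 240 MVA transformer rated at 20 °C, on a 32 °C day.
capacity = derated_capacity_mva(240.0, ambient_c=32.0, reference_c=20.0)
grid_loss = grid_efficiency_loss(32.0 - 20.0)

print(f"Transformer capacity: {capacity:.1f} MVA")
print(f"Grid efficiency loss: {grid_loss:.0%}")
```

Under these assumptions the transformer could safely carry about 211 MVA instead of 240 MVA, while the grid as a whole loses about 4% in efficiency. Real transformers and networks do not respond perfectly linearly, so these figures are only indicative.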

As global temperatures continue to rise, these challenges could grow more acute. At UK power stations, such as Drax, important upgrade and maintenance work takes place during the quieter summer months. If this period becomes one in which there is a higher demand for power at peak times, it could lead to new challenges.

Investing in infrastructure and building a power generation landscape that includes a mix of technologies and meets a variety of grid needs is one way in which we can counter the challenges of climate change. This will mean we can not only move towards a lower-carbon economy and contribute towards slowing global warming, but also respond to climate change by adapting essential national infrastructure to deal with its effects.